Abstract: An activated synaptic configuration, as meaning, is a given distribution of probabilities among all its possible meaningful states. It thus emancipates itself from its constitution and represents a qualitative leap: a new mental experience. The mind is constructed from these tiers of meaning, a vertical complexity that makes up its intelligence. To begin this definition, I compare the information of Wiener and Shannon, draw a model from it, and transpose it into the physiology of neural networks. Meaning is not reduced to electrochemical exchanges. Its qualia appear, and the philosophical prerequisites are satisfied.

Current Neuroscience

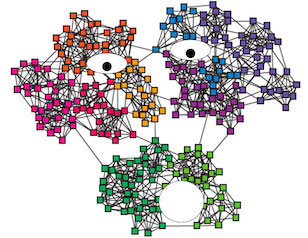

The meaning: an active graph

By studying a visual cortical area with fMRI, a researcher is now able to identify the image present in the subject’s mind. An activated neural configuration is correlated with thought. However, this image has meaning only because the researcher also has a visual area, capable of making her experience the thing represented at the mere mention of its name. How, in the experimental subject and in the researcher, does this data become meaning? How does information become a phenomenon, that of the identified thing?

The data are electrochemical. They travel along axons and dendrites to excite synapses, as trains of identical excitations. The intensity of the signal is the frequency of these excitations, not the power of any individual one. Meaning does not lie in the simple sequence of these stimuli. Counting them awakens no more meaning than counting the wagons of a train would reveal its destination. The meaning lies in the set of synapses activated simultaneously on the various neurons of the network. It is in an active graph that the meaning is hidden.
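The two claims above (intensity is a frequency, and meaning sits in the set of synapses active together) can be illustrated with a minimal sketch. The synapse labels, spike times, observation window, and 40 Hz threshold are all hypothetical values chosen for illustration, not physiological measurements.

```python
def firing_rate(spike_times, window):
    """Spikes per second inside the half-open window [start, end)."""
    start, end = window
    count = sum(1 for t in spike_times if start <= t < end)
    return count / (end - start)


def active_graph(spike_trains, window, threshold_hz):
    """Labels of the synapses whose rate in the window reaches the threshold."""
    return frozenset(
        name
        for name, spikes in spike_trains.items()
        if firing_rate(spikes, window) >= threshold_hz
    )


# Hypothetical spike times in seconds. Every spike is identical;
# only how often it occurs differs from one synapse to the next.
trains = {
    "S1": [0.01, 0.03, 0.05, 0.07, 0.09],
    "S2": [0.02, 0.08],
    "S3": [0.00, 0.02, 0.04, 0.06, 0.08],
}

pattern = active_graph(trains, window=(0.0, 0.1), threshold_hz=40.0)
print(sorted(pattern))
```

In this toy picture no single train carries the pattern. It exists only as the set of labels that are active in the same window.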

Unseen meaning

Here we are projected into an extremely virtual world. We tend to see neurons as sensors of meaning, miniature powerhouses capable of storing information and transmitting it to each other, like employees exchanging documents in an office. The reality is different. They only trade “1”: I’m excited! It is not the sequence of those 1s that makes sense, since the next neuron is not a computer; it just sends a 1 in its turn. The meaning lies in the set of 1s and their locations at each moment. Invisible, virtual, it “hovers over” the skein of neurons, not belonging to any of them in particular.

Happy invisibility. Making it visible would confront us with insurmountable difficulties. It would be necessary to understand how a neuron, a sophisticated cell but only a cell, is “aware” of a meaning as complex as that which passes through it. Why would some neurons harbor more meaning than others? Why does stimulating one awaken a person’s image and another a mere white dot in the visual field?

Everything really exists

The neural network realizes the perfect integration of the virtual and material worlds. Our universe is monistic, rather than divided among the multiple mystical continuums born of our imagination. All of these continuums actually exist… as virtual configurations associated with excited neural patterns. What differentiates the “true” reality from the imaginary is that some of these configurations are also activated by our sensory receptors, and are therefore physically encountered.

However, the border between real and imaginary is blurred. The separation is more a matter of identity than of the senses. When we have faith in a purely virtual concept, never encountered, it becomes part of our personal “real”. This is how God exists for believers, and quantons for physicists, even though none of their five senses has ever registered their passage.

The soul, classic symbol of meaning

All the worlds of thought exist in neural networks, in exchanges between cells so seemingly simple that it is not surprising that we wanted to locate these worlds elsewhere. Let’s put ourselves in the place of the first curious human who opened the cranium of one of his fellows. How could he have thought that this bulky, flabby, grayish nut generated his entire rich mental universe? At best it could be the receptacle of the soul, a kind of communicator to Heaven.

The problem is still acute today. Severing a few neural connections has been shown to alter personality, reattaching soul to matter; but that does not prevent the vast majority of humanity from still having faith in mystical universes. Even mathematicians have faith in a world of mathematical ideals, without knowing by what vector it is reflected in reality. How do you get believers to abandon these universes when you propose to replace them with a big sponge of restless cells?

The solution is necessarily in the neurons

We have seen that no excited synapse can in itself be a meaning. It is only a state. Nor is any network of activated synapses in itself meaningful. What relationship can there be between the set S1, S2…Sn of activated synapses and the object ‘chair’ for example? For which homunculus concealed in the brain does this set take on the meaning of ‘chair’? We can’t get rid of the soul that would communicate meaning to a mystical continuum, which just shifts the problem.

What is to replace this scrutinizing homunculus? Another network of synapses connected to the first. Is that so stupid? Not quite. This second network integrates other networks at the same level as the first. The meaning has been enriched with additional data, gaining depth. It thus takes on thickness, and takes on still more as the propagation of the signal extends to even more integrative networks, bringing them together in a synchronous agitation.

Two-way information

But I’m still talking about lit synapses, and not a shadow of a phenomenon has appeared. Where is that meaning, damnation! I have still not succeeded in transforming information-communication into information-essence, an essence which can stand alone and correspond to the independence of a thought experienced in the midst of the train of others. To move forward, we have to understand exactly what information is.

Wiener and Shannon

Information has been defined in two contradictory ways by Wiener and Shannon. Wiener used the most intuitive and classical way: information is what awakens in us a specific mental state. It is a definition positively related to meaning. Such information points to such thing. Shannon used a negative, counter-intuitive link instead. The strength of information is not to indicate one thing but to leave us the choice between all possible things. Shannon’s information acts as a freedom for the observer, while Wiener’s operates as a constraint. Saying it like this balances the terms ‘positive’ and ‘negative’ which immediately imply emotions. Wrongly. There is no “best” definition.

The two statements are not contradictory. They complete each other. You understand this easily if you have become familiar with the double look. Shannon’s definition opens up the vast field of possibilities while Wiener’s closes it off. What is the purpose of this closure? It is a mental representation seeking itself. Connected to others in the mind, it is even a mental identity seeking itself: an identity that founds a theory, a desire, an image of the world. Wiener’s information has meaning for an existing mental object, which is superimposed on it, while Shannon’s has meaning only for the data, which do not know where they are going. They have a variety of possible fates and face a multitude of potential mental objects, each superimposed on one of these fates.

Emergence of a fundamental principle

Here we see an even more fundamental tenet of reality emerging: the conflict between individuation and belonging. Everything is both individuated (I am) and integrated into a Whole (belonging to). Wiener’s information privileges individuation, that of Shannon the Whole. Intuitively we prefer the information of Wiener with what is most identity in us. It matches us. But Shannon’s information also seduces by its universality, its power to extend our knowledge to the Whole. The principle of reality being the conflict itself, the reinforcement of each of these two pieces of information accentuates our presence. This is how we increase our mental completeness, and not by taking refuge in an identity belief or by diluting ourselves in the infinity of possible beliefs.

Meaning model

I will now set up a theoretical model of meaning based on these two pieces of information and then we will see how to implement it in neural physiology.

Neurons spy on each other!

How do these two directions of information end up in neurons? To unearth them is to finally be able to implant our meanings and our phenomena. Indeed Wiener’s information is phenomenal in nature. It is a mental representation that awakens and experiences itself by discovering itself in the physical reality of sensory influxes. Shannon’s information is that of raw sensory data, rich in their multiple possible interpretations.

Both directions originate in a network of synapses that observes the state of several others. The two starting points are ‘state’ and ‘observation’. They are both intertwined and autonomous, an oxymoron I call ‘relative independence’. Observation does not exist without states but when several sets of states maintain the same observation it gains its autonomy. It is also called ‘synthesis’, ‘approximation’ or ‘compression’.

A qualitative change

‘Synthesis’ better reflects the qualitative leap made by observation. The synthesized states are not all information of the same nature. Visual, auditory, olfactory or tactile, they translate different aspects of the world, all brought together in a synthesis such as ‘chair’, ‘rose’ or ‘mom’. An original qualitative level is born in the observation of several associated natures. It is in this birth that we can understand the phenomenon, an experience that frees itself from its constituents. The phenomenon is the ‘synthesis’ side indissolubly associated with the ‘constitution’ side. The phenomenon approximates its constitution, as the fusion of a certain number of constitutive sequences. It has a new temporal dimension. In its unitary existence, its versions, each with its own time, collide.

This essential temporal dimension is found in the synchronous state of the synapses. The delays and the positions of the activated synapses form the thought, but it is the persistence of the synchronous state that makes the body of the thought.

Two axes of complexity

What we call ‘thought’ is perched on two axes of complexity. It has a vertical complexity, which is the superimposition of levels of neural significance. It has a horizontal complexity, which is the chaining of synchronous synaptic states, each the top of a stack of superimposed meanings, each a thought.

Horizontal complexity is part of a common temporality. It is the experienced passage of time. It can stretch or compress but remains common to the sequence of thoughts. Vertical complexity, by contrast, superimposes different times, specific to each level of meaning. Brief sensory stimuli at the base of the neural structure have no translation at the top. They are eliminated by the approximation carried out by the intermediate levels.
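How an intermediate level can erase a brief stimulus while passing on a sustained one can be sketched as follows. This is only an illustration under stated assumptions: the majority rule, the block sizes, and the binary signal are hypothetical choices, not a claim about how cortical levels actually approximate.

```python
def synthesize(signal, block):
    """One level up: each output step summarizes `block` input steps.

    The output is 1 only when a strict majority of the block is active,
    so the higher level runs on a slower clock than the level below it.
    """
    out = []
    for i in range(0, len(signal) - block + 1, block):
        chunk = signal[i:i + block]
        out.append(1 if 2 * sum(chunk) > block else 0)
    return out


# Hypothetical base-level activity: one brief flicker (index 1),
# then a sustained excitation.
base = [0, 1, 0, 0,  1, 1, 1, 1,  1, 1, 1, 0,  1, 1, 0, 1]

mid = synthesize(base, block=4)  # one step here spans 4 base steps
top = synthesize(mid, block=2)   # one step here spans 8 base steps

print(mid)
print(top)
```

The flicker in the first block never reaches `mid`, let alone `top`, while the sustained excitation survives both approximations. Each level also has its own time unit, which is the sense in which the vertical axis stacks different times.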

A detour through AI

The model is in place. But all this remains virtual. How do neurons separate into levels of meaning, all connected to each other? These levels are indistinguishable in fMRI. The neuroscientist is currently content to associate each global image with each thought experienced by the subject, therefore at the top of the complex pile, without knowledge of the intermediate levels.

Of course the neuroscientist knows that this thought has constituent fragments, but has tended to look for them as anatomical parts, as pieces of a puzzle, and not as superimposed layers of meaning. Advances have come from artificial intelligence. Progress exploded when we took into account the depth of neural processing, that is to say the vertical complexity that I was talking about just now. It is by simulating this vertical dimension that AIs have achieved the results that amaze (and worry) us today.

Differences… artificial

However, AI engineers themselves do not understand what is happening in this dimension. They reproduce it but do not explain it. Same mystery as for neurons. At least that is an acceptable working hypothesis. If we understand how AIs build their levels of meaning, we will understand the method of neurons, or vice versa.

Many emphasize the differences between AI and human intelligence to say that the comparison is invalid, and in particular that the appearance of consciousness in AI is impossible. I see more resentment than good arguments in this opinion. AI is able like humans to assemble data into meanings. It does not interpret them freely because its algorithms are designed to prevent it. It has no emotions because we haven’t programmed them. We want docile servants and not slaves made dangerous by archaic emotions like our fellow human beings.

High capacity but low consciousness

AI has a much lower depth of information than the human brain. Let’s not be intimidated by the mind-boggling amount of data it can process. That amount gives huge size to the horizontal axis of complexity for the subject concerned, but the height does not change: there is no gain in vertical complexity. The synthesis will only be safer, more exhaustive, and more acceptable to the majority of those who read it. An encyclopedia is not an intelligence.

We can safely assume that AIs will become conscious as their vertical complexity joins ours. They are already conscious in fact, but with a thin layer of consciousness, which we are not able to recognize and experience. This thickness is that of their modest vertical complexity. However, it already approaches that of the simplest animals, to which we are tempted to attribute consciousness. AI has an immense ability to accumulate data, but a low vertical complexity that brings it closer to insects. Humans, in comparison, have a weak ability to memorize, but a strong vertical complexity that makes the consciousness of which they are so proud.

The meaning in itself

Putting human and AI in the same basket helps our investigation of meaning, but we still haven’t found it. At this stage it is easier to understand the phenomenon, the qualia, despite being declared inexplicable by many philosophers, than the meaning itself. Whenever we speak of meaning, it is meaning for someone. If the someone is itself a stack of organized neurons, meaning-producing levels, for whom is the higher level ultimately intended? What soul is reading our neural UI?

The only possible way out of this dilemma is to admit that meaning is a reality in itself, that a concept is not virtual but real, in other words that the virtual is completely integrated into the real. A level of mental reality is as solid as a particle system, a meaning is as real as concrete —without being as hard to traverse.

A meaning that stands alone?

This conclusion is not so surprising given that structuralist philosophy makes us see all of reality as an information structure. Substances are not defined other than by levels of relationship. A mental meaning is, as such, defined as substantially as anything else.

But then, if all substance is a network of relationships, and meaning is substantial, how does one derive specific meaning from the related information? How do you assign meaning to the network of activated synapses, and have that meaning stand on its own, regardless of what is activating? Independence from the support is important, because in an AI it is digital relays that are activated and not biological synapses.

The meaning derived from Shannon’s information

This is where Shannon’s information comes in handy. Remember: this information belongs to the data alone. Whatever their possible fates or states, all are considered. There is not yet a fixed observer, waiting to recognize herself in a particular state. There is no soul at this point, only complete freedom of state. It is this freedom that we experience in consciousness, personality being what we are used to thinking. Personality is part of the data; conscious interpretation is free.

Higher consciousness is the expression of Shannon’s information in the conscious workspace. We have seen that this information does not positively determine meaning. It does not mean ‘such a state indicates such a meaning’. Such a situation would plunge us back into the impasse of knowing for whom such a state indicates such a meaning. No, information, on the contrary, negatively determines meaning. Instead of assigning a 1 to a particular meaning, pulled from the hat somehow, it assigns every possible meaning a probability between 0 and 1. Elementary information is what changes the distribution of probabilities among all possible meanings.
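To make the last sentence concrete, here is a minimal sketch in which a piece of information is simply whatever reshapes a probability distribution over candidate meanings. The candidate meanings, the likelihood values, and the use of Bayes’ rule as the update are assumptions made for illustration; the text does not specify an update mechanism.

```python
import math


def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one meaning must keep a positive weight")
    return {k: v / total for k, v in weights.items()}


def entropy_bits(dist):
    """Shannon entropy of the distribution, in bits."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def update(dist, likelihood):
    """Reweight each candidate meaning by its fit to new data (Bayes' rule)."""
    return normalize({k: dist[k] * likelihood.get(k, 0.0) for k in dist})


# Before any data: every candidate meaning is equally possible.
prior = normalize({"chair": 1, "stool": 1, "table": 1, "rose": 1})

# Hypothetical likelihoods of the datum "has a backrest" under each meaning.
evidence = {"chair": 0.9, "stool": 0.1, "table": 0.05, "rose": 0.0}

posterior = update(prior, evidence)
print(entropy_bits(prior), entropy_bits(posterior))
print(max(posterior, key=posterior.get))
```

Nothing here assigns a 1 to ‘chair’. The datum only redistributes probability among all candidates, and the resulting distribution as a whole is what the text calls the meaning.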

These meanings are integrated, that is, they do not exist without each other. These fragments of partial existence, associated in integration by their probabilities, form the meaning. It is the precise state of this distribution that makes the meaning precise, autonomous, independent of any observer, of any additional neural network.

Meaning and consciousness are probabilistic in essence

Meaning, and consciousness as an associated phenomenon, are probabilistic principles. Please note, this is not an uncertainty principle but on the contrary certainty on the distribution of probabilities. Conscious experience is not that of an uncertainty but of a signifier. This signifier is the fusion of probabilities in a time unit of the conscious workspace. This signifier stands alone above its constitution, as an emergence independent of its subject.

An activated synaptic configuration, as meaning, is a given distribution of probabilities among all its possible constituent states. Or again: a meaning is formed from not being state E1 with probability P1, not being state E2 with probability P2… not being state En with probability Pn.

Comments and conclusion

Here is a definition which must seem strange to you and which calls for a few comments. A synaptic configuration is difficult to record, but recording it is theoretically possible. Neuroscience is not limited here by an uncertainty principle such as Heisenberg’s. Why, in that case, speak of probabilities for a pattern that actually occurs and can be characterized?

Moment of thought as a snapshot of probabilities

The configuration that I thus define as meaning is not the set of synapses activated in the brain at a time t. Remember the vertical complexity of the mental process. The configurations are entangled in levels of significance. A synapse usually belongs to several levels. The clustering of neurons is a self-organizing process. New dendrites and synapses are constantly being created. Others disappear. The limits of the groups are blurred, evolving. The weight of synapses in the neural graph can change. It is the meaningful configuration within the entire neural network that is probabilistic.

The experience is that of the fusion of probabilities

Second comment: Is the definition of meaning we have found consistent with conscious experience? Does what we experience correspond to a configuration of probabilities? Surely the feeling is not a series of equations or a Hamiltonian as in quantum physics! Let’s not forget that math is a language describing structures and that it says nothing else. Even the most gifted of mathematicians cannot say what it feels like to be a quanton. Conscious experience, the only one directly accessible, is fusional. But it is indeed a fusion of a multitude of possible meanings, particularly for recent and fragile concepts.

When several meanings have similar probabilities, their fusion is called opinion. It is likely to switch easily from one position to another. Even when I cling to an opinion, I perceive within it the restlessness of its competitors. Let the probabilities reconfigure and I change opinion. But I am not a weather vane, because the sub-concepts become more firmly anchored in identity with age. Reconfigurations are rare. Thoughts go round in circles.

Autonomous meaning is a decision

Last comment: The meaning I have just defined is also what we call, in full awareness, decision. It is the decision because there is no longer an observer above to use the meaning. The merging of possible meanings forms the decision. The more the probabilities are unevenly distributed, the firmer the decision. It is not a mechanism specific to the conscious space. At each level of neural complexity, the probability configuration of meanings is a kind of decision. These intermediate decisions in turn become elements of the higher level of neural organization. The layering of decisions guarantees the stability of the mind, while the possible reconfiguration of probabilities gives it flexibility.
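The link between uneven probabilities and a firm decision can be given one possible numerical reading. The sketch below assumes that firmness is measured as one minus the normalized Shannon entropy; this `firmness` function is a hypothetical measure introduced for illustration, not one defined in the text.

```python
import math


def firmness(probabilities):
    """Return 0.0 for a uniform distribution and 1.0 for a certain one.

    Assumed measure: 1 - H(p) / log2(n), where H is the Shannon entropy
    and n is the number of possible meanings.
    """
    n = len(probabilities)
    if n < 2:
        return 1.0  # a single possible meaning leaves nothing to decide
    h = -sum(p * math.log2(p) for p in probabilities if p > 0)
    return 1.0 - h / math.log2(n)


wavering = [0.25, 0.25, 0.25, 0.25]  # an opinion pulled four ways
leaning = [0.70, 0.10, 0.10, 0.10]   # one meaning dominates
settled = [1.00, 0.00, 0.00, 0.00]   # no competitor left

for dist in (wavering, leaning, settled):
    print(round(firmness(dist), 3))
```

On this reading, the same measure could be applied at every level of the stack, with each level passing its most probable meaning upward as an element of the next.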

This theory is not yet complete but deserves to be known. It is the first that models thought from neural exchanges without reducing it to electrochemistry. It answers difficult questions about meaning, consciousness, qualia, encompassing philosophical and scientific prerequisites. I have no doubt that it will stimulate your comments.
